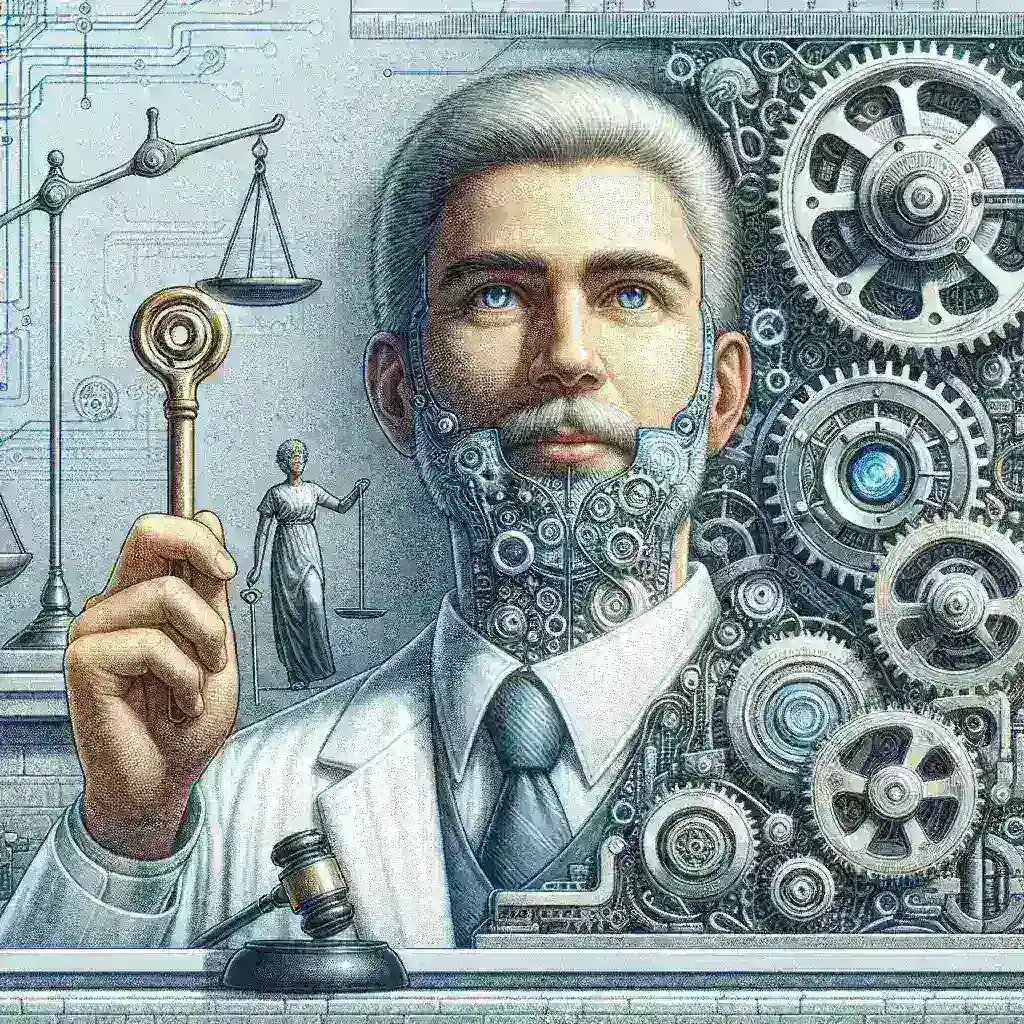

Microsoft Scientist: Smart Regulation Is Key to Unleashing AI’s Potential

A New Perspective on AI Regulation: Smart Rules, Not Stifling Ones

The rapid advancement of artificial intelligence (AI) has sparked both immense excitement and considerable apprehension. AI promises transformative benefits across many sectors, but concerns about ethical implications, job displacement, and potential misuse are equally valid. This article examines the perspective of a Microsoft scientist who champions a different approach: smart regulation that actively promotes responsible AI development rather than hindering its progress.

The traditional narrative often paints regulation as a restrictive force, a barrier to innovation. However, this Microsoft scientist proposes a different viewpoint, arguing that thoughtful, strategic regulation can actually boost AI development by establishing clear ethical guidelines, fostering trust among users, and encouraging responsible innovation. This isn’t about stifling progress; it’s about guiding it toward a beneficial future.

The Case for Smart Regulation: A Balancing Act

The scientist’s argument rests on several key pillars. Firstly, clear guidelines are essential for addressing ethical concerns surrounding AI. These concerns span a wide range, from algorithmic bias and discrimination to privacy violations and the potential for autonomous weapons systems. Smart regulation provides a framework for mitigating these risks, ensuring that AI systems are developed and deployed responsibly.

Secondly, trust is paramount. Public acceptance of AI technologies is crucial for their widespread adoption. Regulation that demonstrates a commitment to transparency, accountability, and fairness can build public confidence, reducing skepticism and fear. This, in turn, creates a more fertile environment for AI innovation.

Thirdly, smart regulation fosters a level playing field. Clear rules and standards help prevent unfair competition and ensure that all players, large and small, operate within a shared framework of ethical and responsible practices. This encourages a healthy and robust AI ecosystem.

Navigating the Complexities: Specific Examples

The scientist doesn’t advocate for vague, overly broad regulations. Instead, they emphasize the importance of narrowly tailored rules that address specific challenges. For example, regulations concerning data privacy are crucial for protecting individual rights and building trust. Similarly, guidelines on algorithmic transparency can help ensure fair and equitable outcomes. The focus is not on suppressing innovation but on directing it along ethical pathways.

Consider the example of self-driving cars. Robust safety regulations are vital to ensure that these technologies are deployed safely and responsibly. However, these regulations should be carefully designed to avoid stifling innovation. A balance is needed between rigorous safety standards and the freedom to develop and test new technologies.

Similarly, in the realm of healthcare, AI holds tremendous potential for improving diagnoses, treatment, and patient care. However, regulations are necessary to ensure data security, patient privacy, and the accuracy and reliability of AI-powered medical devices. Here again, the key is finding the right balance between responsible innovation and necessary safeguards.

Beyond the Headlines: Addressing Misconceptions

One common misconception is that regulation invariably slows down progress. The scientist argues that this sets up a false choice between regulation and progress. Unfettered technological advancement without ethical considerations can lead to unforeseen consequences. Thoughtful regulation, by contrast, can accelerate innovation by reducing uncertainty, establishing clear expectations, and fostering collaboration among stakeholders.

Another misconception is that regulation is inherently bureaucratic and cumbersome. While navigating regulatory processes can be challenging, the long-term benefits of responsible AI development outweigh the short-term inconveniences. Furthermore, efficient and streamlined regulatory frameworks can minimize bureaucratic hurdles while still effectively addressing critical issues.

Looking Ahead: The Future of AI and Regulation

The scientist’s vision for the future is optimistic but cautious. They believe that smart regulation will be essential in ensuring that AI benefits all of humanity, not just a select few. This requires ongoing dialogue and collaboration among researchers, policymakers, and the public. It demands a willingness to adapt and refine regulations as AI technology continues to evolve.

Meeting that goal will require ongoing monitoring and evaluation of regulatory frameworks. Because no single set of rules can cover every aspect of AI development and deployment indefinitely, the approach must stay flexible and responsive to new challenges and opportunities as they emerge.

Furthermore, international cooperation will be vital. AI is a global phenomenon, and its development and application transcend national borders. Harmonizing regulations across countries would help prevent regulatory arbitrage and apply ethical standards more consistently worldwide. This will require significant diplomatic effort and a shared commitment to responsible AI governance.

The Role of Stakeholders: A Collaborative Approach

The responsibility for fostering responsible AI development doesn’t rest solely on policymakers. Researchers, developers, and businesses have an ethical obligation to prioritize safety, fairness, and transparency. This includes actively engaging in discussions about AI ethics and contributing to the development of effective regulatory frameworks. Transparency in algorithms and data usage is paramount.

The public, too, plays a vital role. Informed citizens can demand accountability from developers and policymakers, ensuring that AI technologies are used ethically and for the benefit of society. Education and public awareness are crucial in fostering a shared understanding of the benefits and risks of AI.

Ultimately, the success of smart AI regulation hinges on collaboration. By working together, researchers, policymakers, businesses, and the public can shape the future of AI in a way that promotes innovation while safeguarding ethical principles.

Real-World Approaches to AI Regulation

Several countries and regions have already begun to explore different approaches to AI regulation. The European Union’s AI Act, for example, aims to establish a comprehensive regulatory framework for AI systems, classifying them based on their risk level and imposing different requirements accordingly. This approach demonstrates a commitment to balancing innovation with responsible development. While still under development, its principles are informing debates globally.

Similarly, initiatives in countries like Canada and Singapore aim to foster innovation while addressing ethical concerns. These efforts suggest that it’s possible to build robust regulatory frameworks that don’t stifle progress but instead guide it toward a more positive and beneficial future. The key is collaborative, iterative development.

The Microsoft scientist’s perspective underscores the need for a nuanced and adaptive approach to AI regulation. Smart regulation isn’t simply about imposing restrictions; it’s about enabling responsible innovation, fostering public trust, and ensuring that AI benefits all of humanity.

It’s about striking a careful balance: fostering the rapid advancements shaping our world while establishing ethical guardrails and promoting accountability. This isn’t a simple task, but it’s a crucial one. The future of AI depends on it.

For more information on AI ethics and regulation, you can refer to resources like the OECD’s work on AI and the Brookings Institution’s research on AI.